Jiachen Liu

I'm currently a machine learning engineer at TikTok in San Jose. I received my PhD in Information Sciences and Technology from Penn State University in 2024, where I was fortunate to be advised by Dr. Sharon X. Huang. Before that, I received my bachelor's degree from Beihang University in China. My research interests and experience include 3D vision and generative AI. Specifically, I am interested in structured 3D reconstruction, scene understanding and their downstream applications, as well as generalizable 3D reconstruction across diverse environments.

Email / Google Scholar / LinkedIn / GitHub

Selected Research

My primary interest lies in 3D vision and generative AI, including structured 3D reconstruction, scene understanding, generalizable 3D vision, scene layout generation, and 3D-aware asset generation. (* indicates equal contribution.)

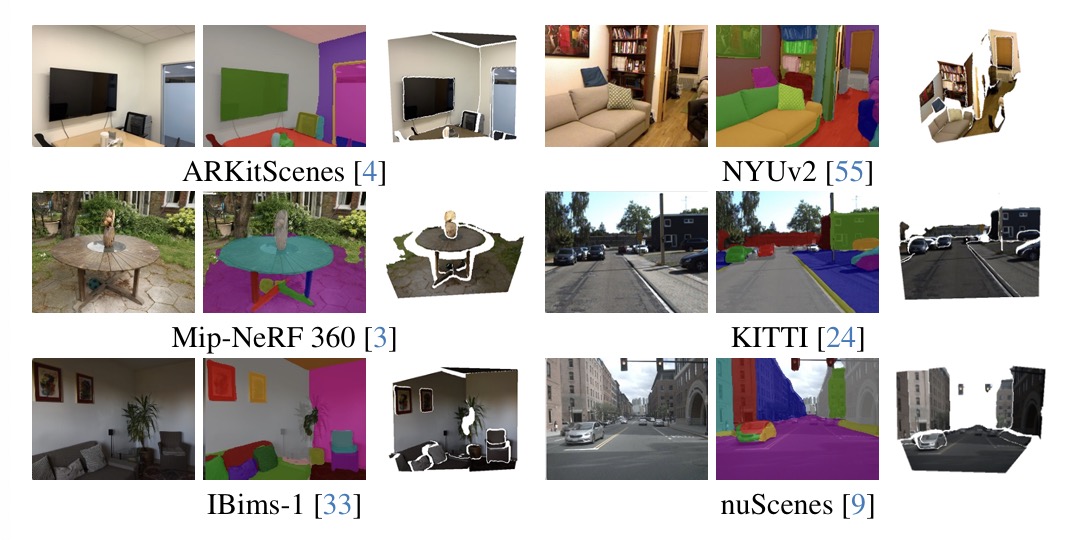

Towards In-the-wild 3D Plane Reconstruction from a Single Image

Jiachen Liu*, Rui Yu*, Sili Chen, Sharon X. Huang, Hengkai Guo

CVPR, 2025 (Highlight Presentation)

code / arXiv

We introduce the problem of in-the-wild, zero-shot 3D plane reconstruction. To this end, (1) we construct a large-scale benchmark dataset with high-quality, dense planar annotations drawn from multiple RGB-D datasets spanning diverse indoor and outdoor environments, and (2) we propose a Transformer-based framework trained on mixed datasets, with a disentangled, classification-then-regression paradigm for learning plane normals and offsets, to address the challenge of scale invariance across diverse indoor and outdoor scenes. Our model achieves state-of-the-art planar reconstruction performance in both accuracy and generalizability.

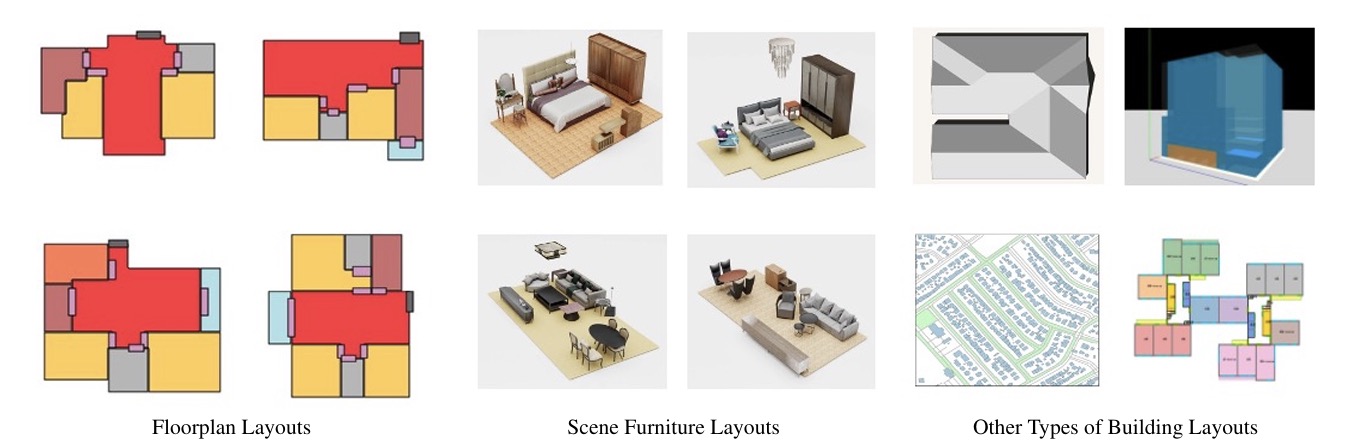

Computer-Aided Layout Generation for Building Design: A Review

Jiachen Liu, Yuan Xue, Haomiao Ni, Rui Yu, Zihan Zhou, Sharon X. Huang

CVMJ, 2025

arXiv

We present a comprehensive survey of current methods for floorplan layout generation, scene synthesis, and other layout generation topics. We also discuss promising directions for future work in this area.

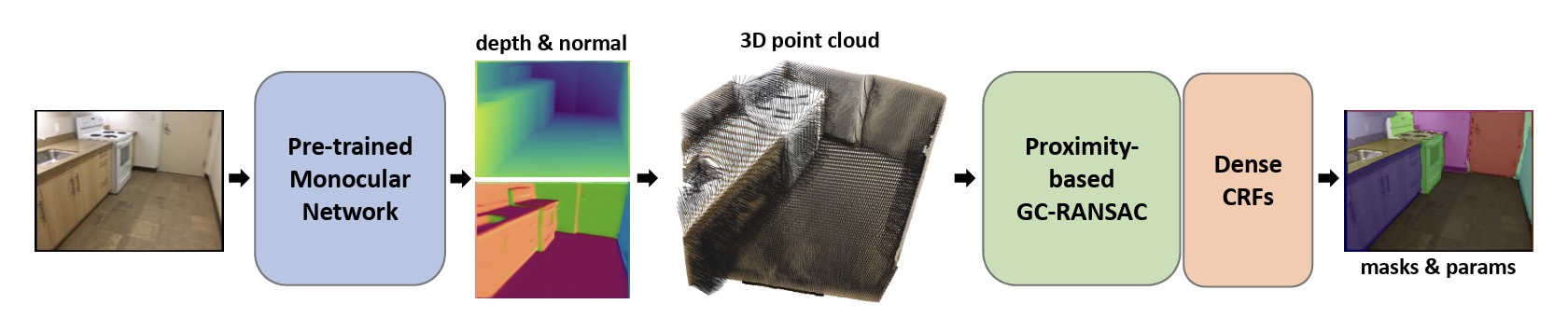

MonoPlane: Exploiting Monocular Geometric Cues for Generalizable 3D Plane Reconstruction

Wang Zhao*, Jiachen Liu*, Sheng Zhang, Yishu Li, Sili Chen, Sharon X. Huang, Yong-Jin Liu, Hengkai Guo

IROS, 2024 (Oral Presentation)

code (coming soon) / arXiv

We leverage pretrained geometric foundation models (depth and normal) for zero-shot monocular 3D plane reconstruction.

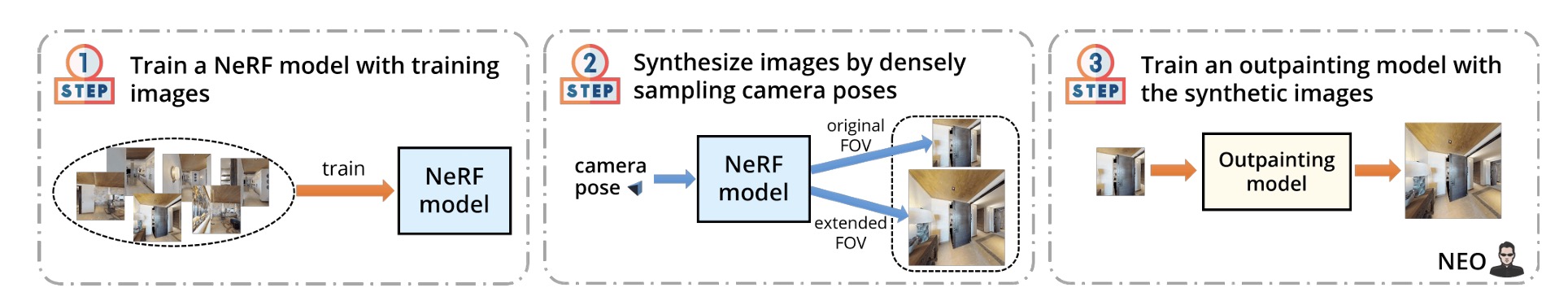

NeRF-Enhanced Outpainting for Faithful Field-of-View Extrapolation

Rui Yu*, Jiachen Liu*, Zihan Zhou, Sharon X. Huang

ICRA, 2024

arXiv

We represent an indoor scene with a NeRF and render novel views to augment the training set. We then train a view extrapolation network on this densely sampled synthetic data, improving its ability to extrapolate the field of view in a 3D-coherent manner.

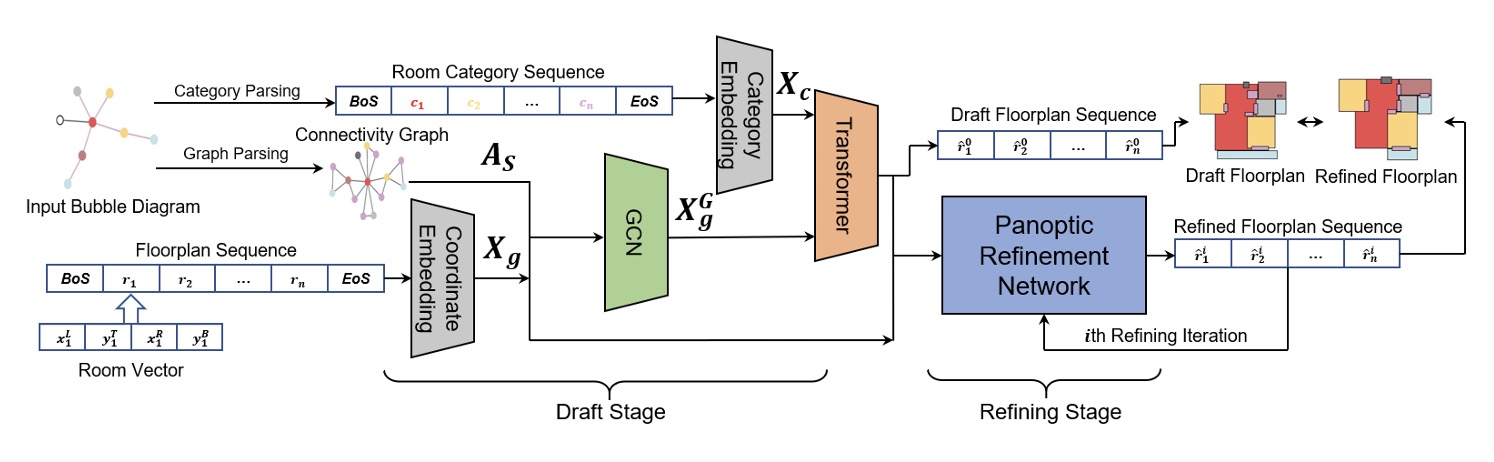

End-to-end Graph-constrained Vectorized Floorplan Generation with Panoptic Refinement

Jiachen Liu*, Yuan Xue, Jose Duarte, Krishnendra Shekhawat, Zihan Zhou, Xiaolei Huang

ECCV, 2022

arXiv

We propose a Transformer-based two-stage framework that effectively generates vectorized interior floorplan layouts in an end-to-end manner.

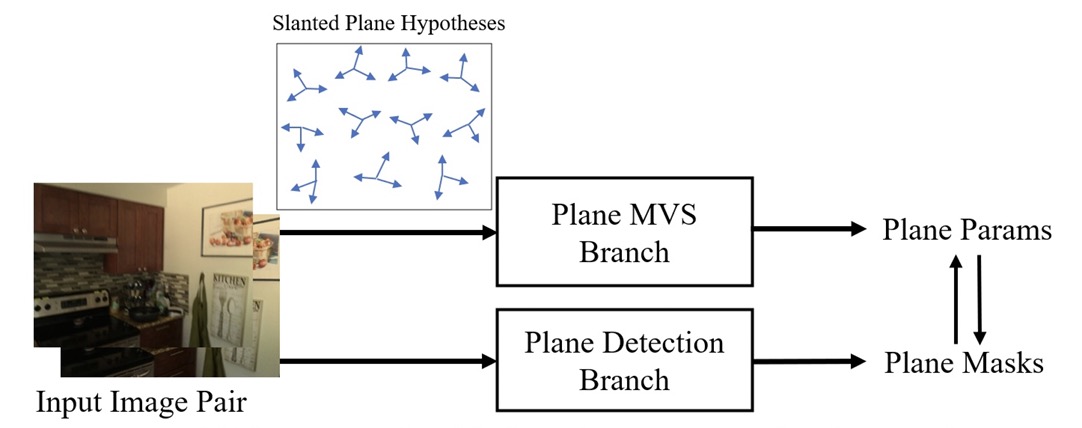

PlaneMVS: 3D Plane Reconstruction from Multi-View Stereo

Jiachen Liu, Pan Ji, Nitin Bansal, Changjiang Cai, Qingan Yan, Xiaolei Huang, Yi Xu

CVPR, 2022

code / arXiv

We present a multi-view plane reconstruction framework built on the proposed slanted plane hypothesis. It achieves state-of-the-art plane reconstruction as well as superior multi-view depth estimation across multiple benchmark datasets.

Academic Service

I serve as a regular reviewer for top-tier CV/ML conferences, including CVPR, ICCV, ECCV, NeurIPS, ICLR, and ICML.

© Jiachen Liu | Last updated: June 2025 | Design and source code from Jon Barron's website